Scientific context

One of the long-standing questions in modern astrophysics concerns the inefficiency of the star formation process in galaxies. Given the amount of interstellar gas present in galaxies, one would expect about 100 times more stars to form than are observed. Many physical processes have been proposed that slow or prevent the gravitational collapse of the interstellar clouds where stars are born, but there is still no consensus on the exact scenario that converts gas into stars. The evolution of matter in galaxies is in fact a complex, self-organized system. The physics of the interstellar medium involves several (dynamical, chemical, thermal) instabilities coupled to magneto-hydrodynamical turbulence, gravity and energy injection by the stars themselves. This multi-scale and multi-phase physics is now studied using massive numerical simulations. Great progress has been made in the last 15 years, to the point that observational constraints are now what is lacking to identify the plausible physical scenario. This is partly due to the difficulty of extracting physical information from the observations and to the limited ways we have of comparing them to numerical simulations.

Scientific challenges

This field of research faces several major scientific obstacles. One challenge is our ability to identify coherent structures in hyper-spectral data, in an unbiased way and down to the noise level. Such data are position-position-velocity (PPV) cubes: two coordinates on the plane of the sky plus a velocity axis. The difficulty is that there is no bijection between the velocity axis of the data and the spatial dimension perpendicular to the plane of the sky. Clouds occupying different volumes in real three-dimensional space can overlap in PPV space. The problem is therefore not only to identify isolated structures in PPV space but also to deal with the velocity overlap (confusion), and to estimate the quality of the reconstruction based on criteria learned, using numerical simulations, from the physics of the media under study.
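The velocity confusion described above can be illustrated with a toy model. The sketch below is only an illustration under simple assumptions: clouds are box-shaped, each has a single line-of-sight velocity, and the helper names (`make_cloud`, `project_to_ppv`, `confused_voxels`) are hypothetical and not taken from any existing package. It builds two clouds that are well separated along the line of sight, projects them onto two sky coordinates plus a velocity channel, and lists the voxels where they can no longer be told apart.

```python
from collections import defaultdict


def make_cloud(label, x_range, y_range, z_range, velocity):
    """Return (label, x, y, z, v) cells for a box-shaped toy cloud.

    x, y are sky-plane coordinates, z is the line-of-sight depth,
    and every cell shares the same velocity (a simplifying assumption).
    """
    return [
        (label, x, y, z, velocity)
        for x in range(*x_range)
        for y in range(*y_range)
        for z in range(*z_range)
    ]


def project_to_ppv(cells, channel_width=1.0):
    """Map 3D cells to (x, y, velocity channel) voxels; depth z is lost."""
    ppv = defaultdict(set)
    for label, x, y, _z, v in cells:
        channel = int(v // channel_width)
        ppv[(x, y, channel)].add(label)
    return ppv


def confused_voxels(ppv):
    """Return the sorted PPV voxels that contain more than one cloud."""
    return sorted(voxel for voxel, labels in ppv.items() if len(labels) > 1)


# Two clouds far apart in depth (z) but with similar velocities.
cloud_a = make_cloud("A", (0, 4), (0, 4), (0, 2), velocity=5.2)
cloud_b = make_cloud("B", (2, 6), (2, 6), (10, 12), velocity=5.7)

# In real 3D space the two clouds share no cell.
positions_a = {(x, y, z) for _, x, y, z, _ in cloud_a}
positions_b = {(x, y, z) for _, x, y, z, _ in cloud_b}
ppp_overlap = positions_a & positions_b

# In PPV space they overlap wherever their sky footprints intersect.
confused = confused_voxels(project_to_ppv(cloud_a + cloud_b))

# With narrower channels the same two clouds separate again.
confused_fine = confused_voxels(
    project_to_ppv(cloud_a + cloud_b, channel_width=0.25)
)

print("shared cells in 3D space:", len(ppp_overlap))
print("confused PPV voxels:", confused)
print("confused PPV voxels with finer channels:", confused_fine)
```

In this toy case, finer velocity channels remove the confusion. Real clouds have internal velocity dispersion, so their velocity ranges can overlap whatever the channel width, which is why the overlap has to be handled explicitly.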

A second challenge is the rapid increase in data size. Datasets are now reaching sizes that require changes in the way we design algorithms and implement them on computing architectures. Huge datasets are already being handled for telescopes like the Atacama Large Millimeter/submillimeter Array (ALMA), but this is still several orders of magnitude less than what we will face in a few years with the Square Kilometre Array (SKA).

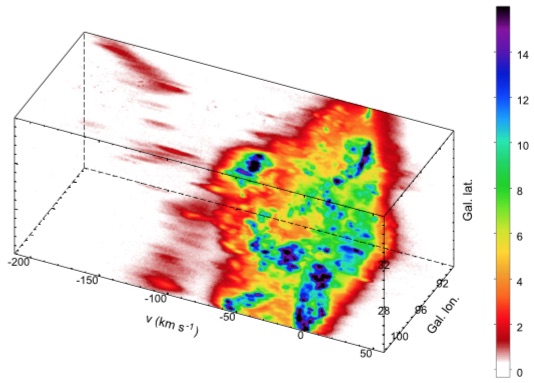

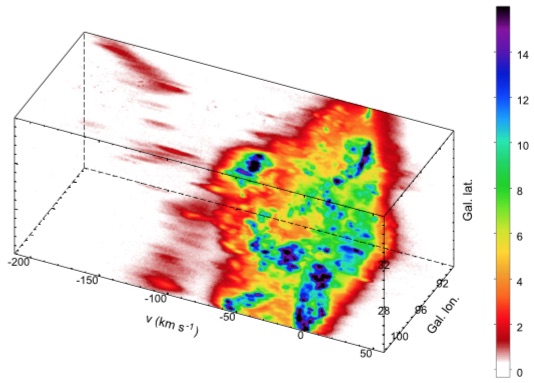

21 cm PPV cube of a local HI cloud

Even though the velocity information helps tremendously to separate sources of emission along the line of sight, the multi-scale nature of the interstellar medium makes the structure identification process difficult. Nevertheless, this is a necessary step for estimating the physical properties (size, mass, dynamics, temperature, density…) of the clouds from which stars form.

Martin et al. (2015)

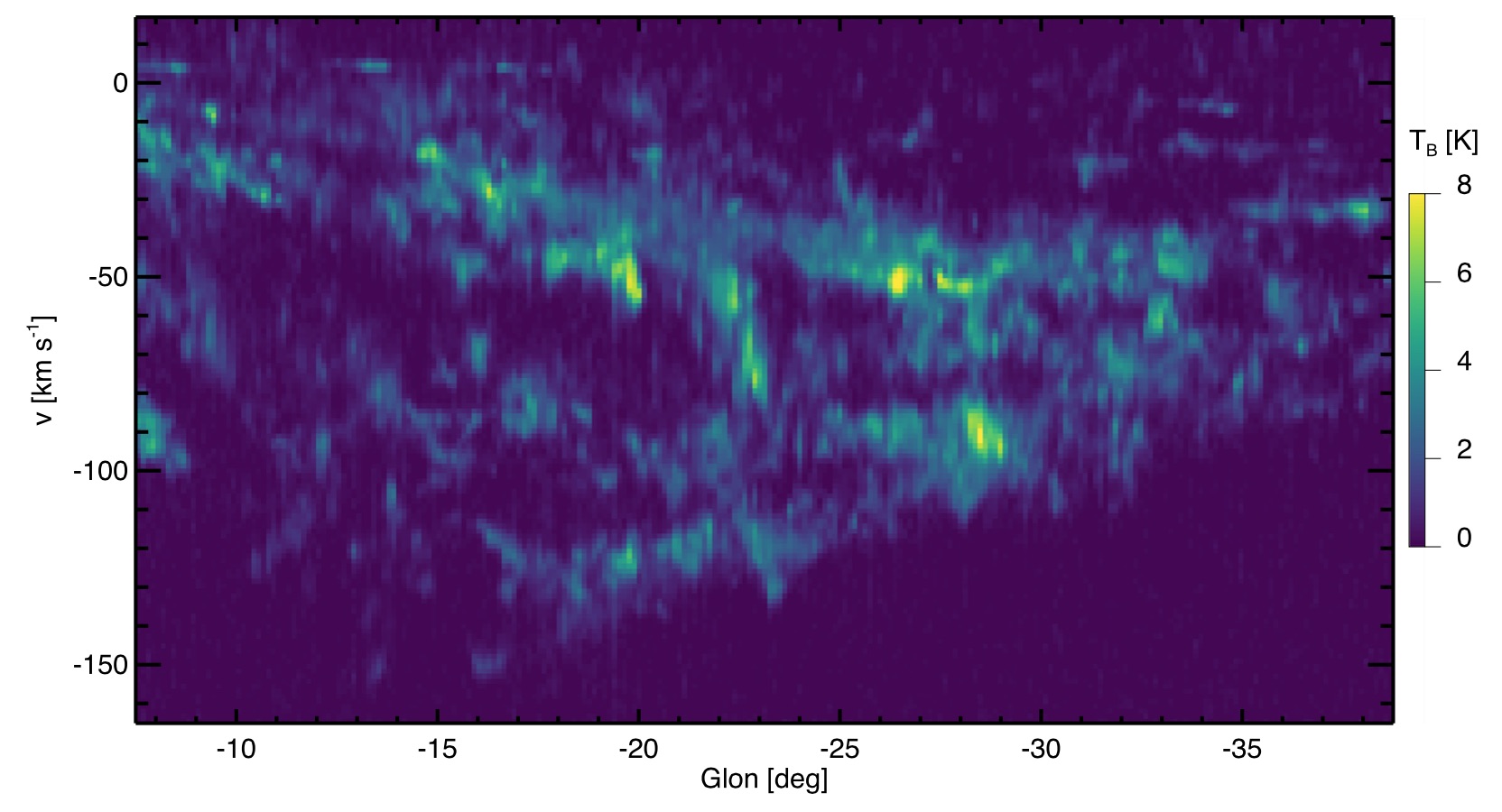

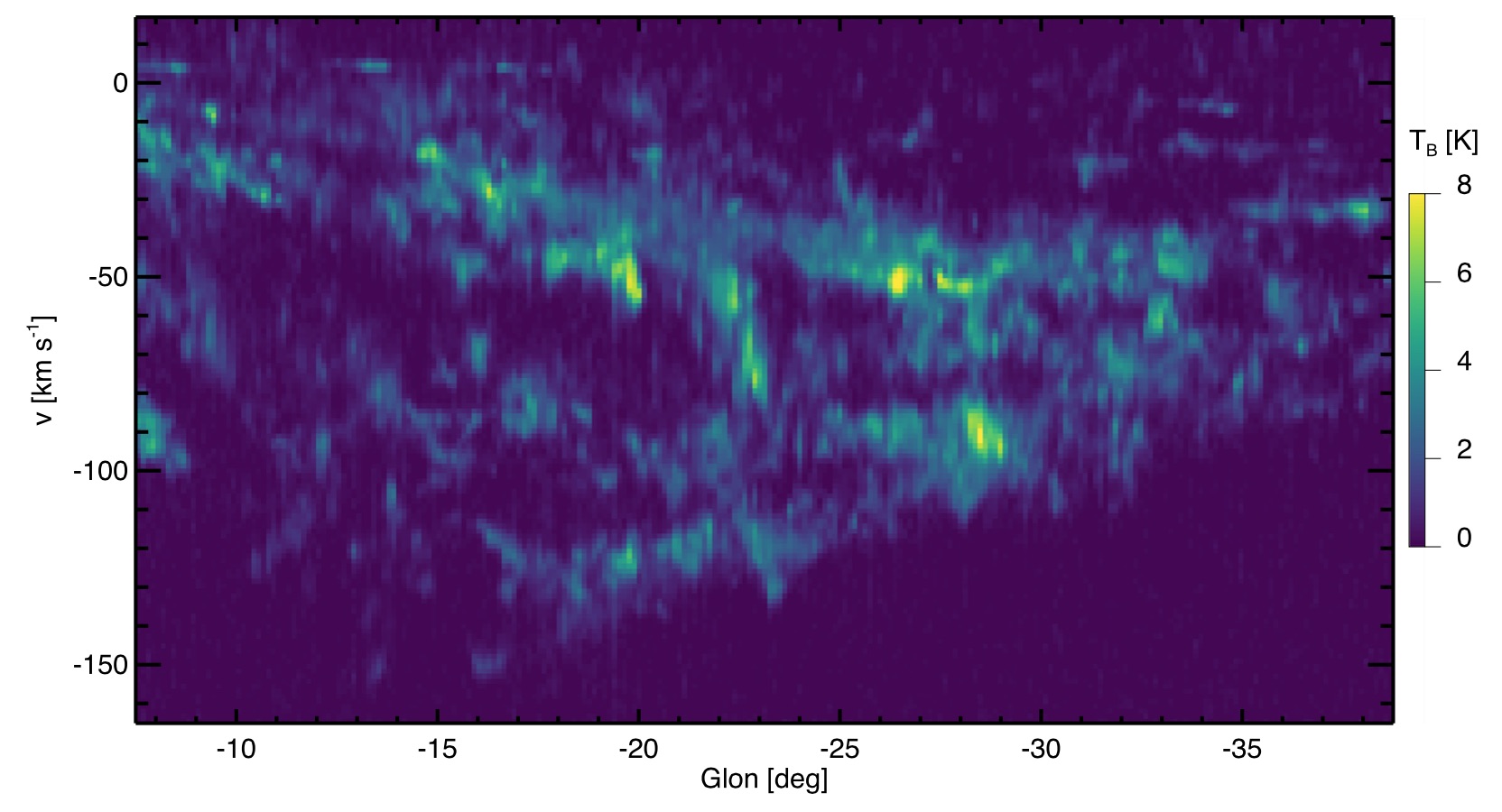

2D slice of a CO cube of a section of the Galactic plane

Until very recently, because of the lack of suitable segmentation methods, only the brightest clouds, and therefore the most massive ones, were extracted from such data, giving a very biased view of interstellar clouds and limiting our capacity to build a proper theory of star formation.

Miville-Deschenes et al. (2017)